NVIDIA A100 Review

How NVIDIA's A100 GPU is Revolutionizing AI and High-Performance Computing

Introduction

In the fast-moving fields of artificial intelligence (AI) and high-performance computing (HPC), NVIDIA's A100 GPU has become a reference point for data-center acceleration. It is designed to tackle the most demanding workloads, from deep learning and data analytics to scientific simulations. In this post, we'll dive into the key features, performance benchmarks, and real-world applications of the A100, and explore how it is changing what's practical with GPU technology.

The Power of the A100

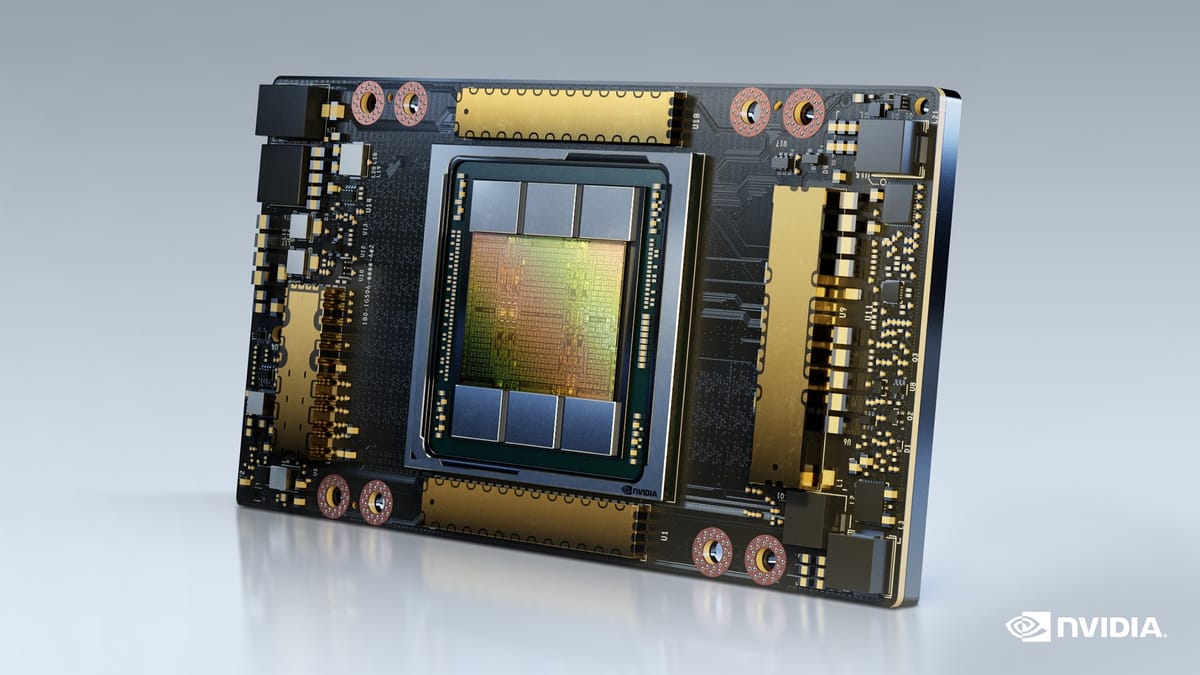

At the heart of the A100's performance are its specifications. With 54.2 billion transistors packed onto a single chip, 6,912 CUDA cores, and 432 Tensor cores, this GPU is a computational powerhouse. The 40GB A100 uses high-bandwidth HBM2 memory that delivers 1,555 GB/s of memory bandwidth (the later 80GB variant moved to HBM2e). These specs translate into strong performance across a wide range of applications.
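
As a rough sanity check on the bandwidth figure, peak memory bandwidth can be estimated from the bus width and the memory data rate. The sketch below assumes a 5,120-bit memory interface and a 1,215 MHz double-data-rate memory clock; both values come from NVIDIA's published A100 40GB specifications rather than from this post, and the function name is only illustrative.

```python
def peak_memory_bandwidth_gbs(bus_width_bits: int,
                              memory_clock_mhz: float,
                              transfers_per_clock: int = 2) -> float:
    """Theoretical peak memory bandwidth in GB/s (1 GB = 1e9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    transfers_per_second = memory_clock_mhz * 1e6 * transfers_per_clock
    return bytes_per_transfer * transfers_per_second / 1e9


# Assumed A100 40GB values: 5,120-bit bus, 1,215 MHz DDR memory clock.
a100_bandwidth = peak_memory_bandwidth_gbs(5120, 1215)
print(f"{a100_bandwidth:.1f} GB/s")  # 1555.2 GB/s
```

This is a theoretical ceiling; sustained bandwidth in real kernels is typically lower.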

1. Unprecedented Performance

- OctaneBench Results: The A100 set a record on the OctaneBench benchmark with a score of 446 points, 11.2% higher than the previous record holder, the NVIDIA Titan V. This showcases the A100's raw computational power and its ability to handle complex rendering tasks.

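- ResNet-50 Performance: In deep learning workloads, the A100 truly shines. It can process about 2,400 images per second on the ResNet-50 model, significantly outpacing consumer GPUs like the RTX 3090, which manages around 1,400 images per second. Throughput figures like these depend on batch size, numeric precision, and software stack, so treat them as indicative rather than exact.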
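
To put the two benchmark figures above in relative terms, here is a minimal sketch that turns them into ratios. The input numbers are the ones quoted in this post; the implied Titan V score is derived from the 11.2% margin and is not a reported measurement.

```python
def speedup(new: float, baseline: float) -> float:
    """Ratio of a new result to a baseline result."""
    return new / baseline


# ResNet-50 throughput figures quoted above (images per second).
a100_images_per_s = 2400
rtx3090_images_per_s = 1400
resnet_speedup = speedup(a100_images_per_s, rtx3090_images_per_s)
print(f"ResNet-50: A100 is about {resnet_speedup:.2f}x the RTX 3090")

# OctaneBench: back out the baseline implied by "11.2% higher".
a100_octane_score = 446
implied_titan_v_score = a100_octane_score / 1.112
print(f"Implied Titan V score: about {implied_titan_v_score:.0f}")
```

On these numbers the A100 delivers roughly 1.7 times the ResNet-50 throughput of the RTX 3090, and the OctaneBench margin implies a Titan V score of about 401.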

2. Multi-Instance GPU (MIG) Technology

One of the A100's most innovative features is its Multi-Instance GPU (MIG) technology. MIG allows the A100 to be partitioned into up to seven separate GPU instances, each with its own dedicated compute, memory, and cache resources. This enables multiple users or workloads to run simultaneously on a single A100 without interfering with one another, maximizing utilization and efficiency. MIG is transparent to CUDA programs: each instance appears as an ordinary GPU, so existing applications fit into MIG-based workflows without code changes.
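
To make the partitioning idea concrete, the sketch below checks whether a requested mix of MIG instances fits within one 40GB A100's slice budget. It assumes the profile names and slice counts from NVIDIA's MIG documentation (7 compute slices and 8 memory slices per GPU); the function name is hypothetical. The check is a necessary condition only, since the real driver also enforces placement rules that this sketch ignores.

```python
from typing import Sequence

# (compute slices, memory slices) per MIG profile on a 40GB A100.
# Assumption: values follow NVIDIA's MIG documentation, not this post.
MIG_PROFILES = {
    "1g.5gb": (1, 1),
    "2g.10gb": (2, 2),
    "3g.20gb": (3, 4),
    "4g.20gb": (4, 4),
    "7g.40gb": (7, 8),
}
TOTAL_COMPUTE_SLICES = 7
TOTAL_MEMORY_SLICES = 8


def fits_slice_budget(requested: Sequence[str]) -> bool:
    """Return True if the requested profiles fit the GPU's total slice counts."""
    compute = sum(MIG_PROFILES[name][0] for name in requested)
    memory = sum(MIG_PROFILES[name][1] for name in requested)
    return compute <= TOTAL_COMPUTE_SLICES and memory <= TOTAL_MEMORY_SLICES


print(fits_slice_budget(["1g.5gb"] * 7))                    # True: seven small instances
print(fits_slice_budget(["4g.20gb", "3g.20gb"]))            # True: one large, one medium
print(fits_slice_budget(["3g.20gb", "3g.20gb", "1g.5gb"]))  # False: needs 9 memory slices
```

The third case shows why seven instances is an upper bound rather than a guarantee: larger profiles consume memory slices faster than compute slices.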

3. Advanced Connectivity and Memory Management

- NVLink and PCIe Gen 4: The A100 supports high-speed NVLink connectivity between GPUs, enabling low-latency communication and up to 600 GB/s of total bidirectional bandwidth per GPU. Additionally, PCIe Gen 4 support doubles the host-to-GPU transfer rate compared to PCIe Gen 3.

- Enhanced Memory Management: With advanced memory management techniques like fine-grained memory access and error-correcting code (ECC) support, the A100 ensures data integrity and optimal performance in memory-intensive applications.
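
The bandwidth figures above can be compared with a back-of-the-envelope calculation. The sketch below assumes a x16 PCIe link, the per-lane rates of 8 GT/s (Gen 3) and 16 GT/s (Gen 4) with 128b/130b encoding from the PCIe specifications, and an even split of NVLink's 600 GB/s between the two directions. These are theoretical peaks that ignore protocol overhead, and the helper names are illustrative.

```python
def pcie_gbs_per_direction(gigatransfers_per_s: float, lanes: int = 16) -> float:
    """Theoretical PCIe bandwidth in GB/s, one direction, 128b/130b encoding."""
    encoding_efficiency = 128 / 130
    return gigatransfers_per_s * lanes * encoding_efficiency / 8


def transfer_seconds(payload_gb: float, bandwidth_gbs: float) -> float:
    """Idealized time to move a payload at a given bandwidth."""
    return payload_gb / bandwidth_gbs


pcie_gen3 = pcie_gbs_per_direction(8.0)
pcie_gen4 = pcie_gbs_per_direction(16.0)
nvlink_per_direction = 600 / 2  # assumed even split of the bidirectional total

payload_gb = 40  # e.g. the full contents of a 40GB A100's memory
for name, bandwidth in [("PCIe Gen 3 x16", pcie_gen3),
                        ("PCIe Gen 4 x16", pcie_gen4),
                        ("NVLink", nvlink_per_direction)]:
    seconds = transfer_seconds(payload_gb, bandwidth)
    print(f"{name}: {bandwidth:.1f} GB/s, {seconds:.2f} s for {payload_gb} GB")
```

Even at theoretical peak, the gap between PCIe and NVLink is roughly an order of magnitude, which is why multi-GPU training traffic is kept on NVLink where possible.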

Real-World Applications and Impact

The A100's exceptional capabilities have made it the GPU of choice for cutting-edge AI research, scientific simulations, and data analytics across various industries.

1. AI and Deep Learning

- Language Models: The A100 has become the backbone of large-scale language model training. Its massive computational power enables researchers to train models with billions of parameters, pushing the boundaries of natural language understanding and generation.

- Computer Vision: In computer vision tasks like object detection and image segmentation, the A100's Tensor cores accelerate deep learning inference, enabling real-time processing of high-resolution images and videos.
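
To give a sense of scale for "billions of parameters", the sketch below estimates how much memory a model's training state needs and how many 40GB A100s that implies. It assumes a common rule of thumb of about 16 bytes per parameter for mixed-precision training with the Adam optimizer (FP16 weights and gradients plus FP32 master weights and two optimizer moments), and it ignores activations and framework overhead. The rule of thumb and function names are assumptions, not figures from this post.

```python
def training_state_bytes(num_params: int, bytes_per_param: int = 16) -> int:
    """Approximate bytes for weights, gradients, and optimizer state."""
    return num_params * bytes_per_param


def min_gpus_for_state(num_params: int,
                       gpu_memory_gb: int = 40,
                       bytes_per_param: int = 16) -> int:
    """Minimum GPU count whose combined memory holds the training state."""
    total = training_state_bytes(num_params, bytes_per_param)
    per_gpu = gpu_memory_gb * 10**9
    return -(-total // per_gpu)  # ceiling division on integers


for billions in (1, 10, 100):
    params = billions * 10**9
    state_gb = training_state_bytes(params) / 1e9
    print(f"{billions}B params: ~{state_gb:.0f} GB of state, "
          f"at least {min_gpus_for_state(params)} x 40GB A100")
```

Under these assumptions, a 10-billion-parameter model already needs its training state spread across several GPUs, which is where high-bandwidth interconnects between A100s become important.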

2. Scientific Simulations

- Weather and Climate Modeling: The A100's high-performance computing capabilities have revolutionized weather and climate simulations. By leveraging the GPU's parallel processing power, scientists can run more accurate and detailed models, leading to better predictions and understanding of our planet's complex systems.

- Molecular Dynamics: In fields like drug discovery and materials science, the A100 accelerates molecular dynamics simulations, allowing researchers to study the behavior of molecules at an unprecedented scale and speed.

3. Data Analytics and Big Data

- Accelerated Analytics: The A100's massive memory bandwidth and parallel processing capabilities speed up big data analytics. Data processing engines like Apache Spark, which can offload SQL and DataFrame operations to GPUs through NVIDIA's RAPIDS Accelerator, have leveraged the A100 to achieve significant performance gains, enabling faster insights from massive datasets.

- Fraud Detection: In the financial industry, the A100's computing power is being harnessed for real-time fraud detection. By analyzing vast amounts of transactional data as it arrives, financial institutions can identify and prevent fraudulent activities more effectively.

Conclusion

NVIDIA's A100 GPU has set a new standard for performance and versatility in AI and high-performance computing. With its unparalleled computational power, innovative features like MIG, and advanced connectivity options, the A100 is driving breakthroughs across industries. As researchers and businesses continue to push the limits of what's possible with GPU technology, the A100 will undoubtedly play a crucial role in shaping the future of AI, scientific discovery, and data-driven insights.

As we look ahead, it's clear that the A100 is just the beginning of a new era in GPU computing. NVIDIA's commitment to innovation and its relentless pursuit of performance will continue to drive advancements in AI and HPC, unlocking new possibilities and transforming the way we solve complex problems. Whether you're a researcher, data scientist, or business leader, the A100 is a powerful tool that can help you stay at the forefront of your field and achieve groundbreaking results.
